Publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

2024

- AutoMix: Automatically Mixing Language Models
  Pranjal* Aggarwal, Aman* Madaan, Ankit Anand, and 10 more authors
  To appear in Neural Information Processing Systems, 2024

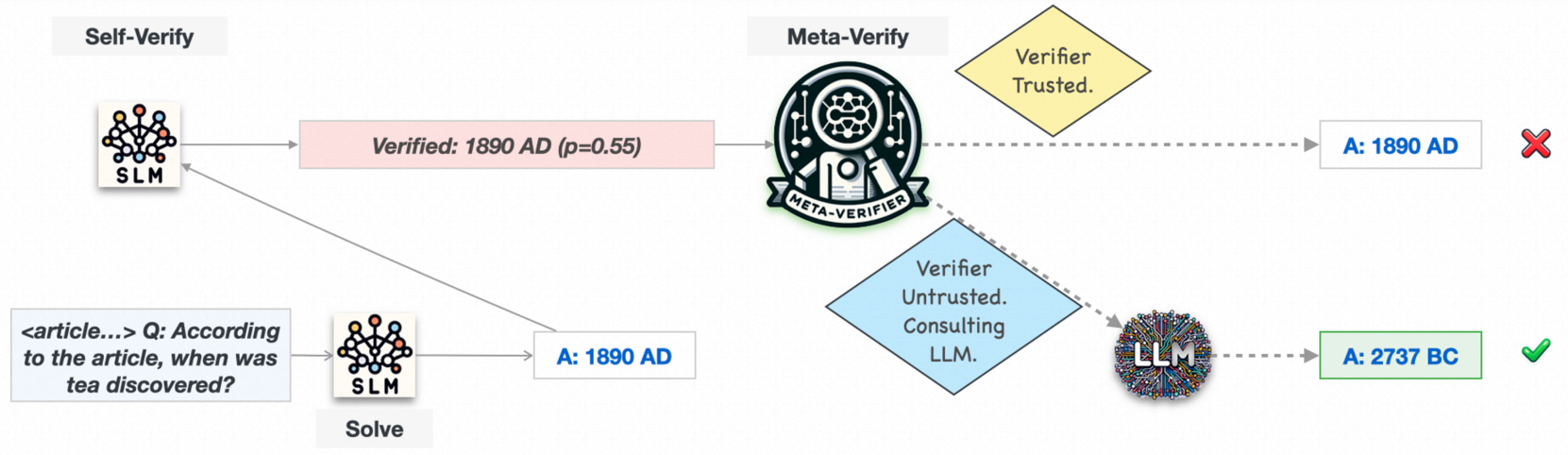

Large language models (LLMs) are now available from cloud API providers in various sizes and configurations. While this diversity offers a broad spectrum of choices, effectively leveraging the options to optimize computational cost and performance remains challenging. In this work, we present AutoMix, an approach that strategically routes queries to larger LMs, based on the approximate correctness of outputs from a smaller LM. Central to AutoMix are two key technical contributions. First, it has a few-shot self-verification mechanism, which estimates the reliability of its own outputs without requiring extensive training. Second, given that self-verification can be noisy, it employs a POMDP-based router that can effectively select an appropriately sized model, based on answer confidence. Experiments across five language models and five challenging datasets show that AutoMix consistently surpasses strong baselines, reducing computational cost by over 50% for comparable performance.
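The routing idea in the abstract above can be illustrated with a minimal sketch. This is not the AutoMix implementation: the abstract describes a POMDP-based router, whereas the sketch below substitutes a plain confidence threshold for it. The names `route`, `RoutedAnswer`, `small_lm`, `large_lm`, `verifier`, `threshold`, and `n_checks` are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RoutedAnswer:
    answer: str        # final answer returned to the caller
    model: str         # "small" or "large": which model produced it
    confidence: float  # fraction of verifier checks that accepted the draft


def route(
    query: str,
    small_lm: Callable[[str], str],
    large_lm: Callable[[str], str],
    verifier: Callable[[str, str], bool],
    threshold: float = 0.5,
    n_checks: int = 8,
) -> RoutedAnswer:
    """Answer with the small model; escalate when verification is weak.

    Assumption: a fixed threshold stands in for the POMDP-based router
    mentioned in the abstract. All callables are user-supplied stubs.
    """
    if n_checks < 1:
        raise ValueError("n_checks must be at least 1")

    draft = small_lm(query)

    # Self-verification can be noisy, so run the check several times
    # and use the acceptance rate as a confidence estimate.
    votes = [verifier(query, draft) for _ in range(n_checks)]
    confidence = sum(votes) / n_checks

    if confidence >= threshold:
        return RoutedAnswer(draft, "small", confidence)
    return RoutedAnswer(large_lm(query), "large", confidence)
```

With this shape, the larger model is called only for queries whose draft answer fails verification often enough, which is where the cost saving would come from under these assumptions.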

@inproceedings{Madaan2023AutoMixAM,
  title = {AutoMix: Automatically Mixing Language Models},
  author = {Aggarwal, Pranjal* and Madaan, Aman* and Anand, Ankit and Potharaju, Srividya Pranavi and Mishra, Swaroop and Zhou, Pei and Gupta, Aditya and Rajagopal, Dheeraj and Kappaganthu, Karthik and Yang, Yiming and Upadhyay, Shyam and Mausam and Faruqui, Manaal},
  year = {2024},
  booktitle = {To appear in Neural Information Processing Systems, 2024},
}

- GEO: Generative Engine Optimization
  Pranjal Aggarwal, Vishvak Murahari, Tanmay Rajpurohit, and 3 more authors
  In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2024

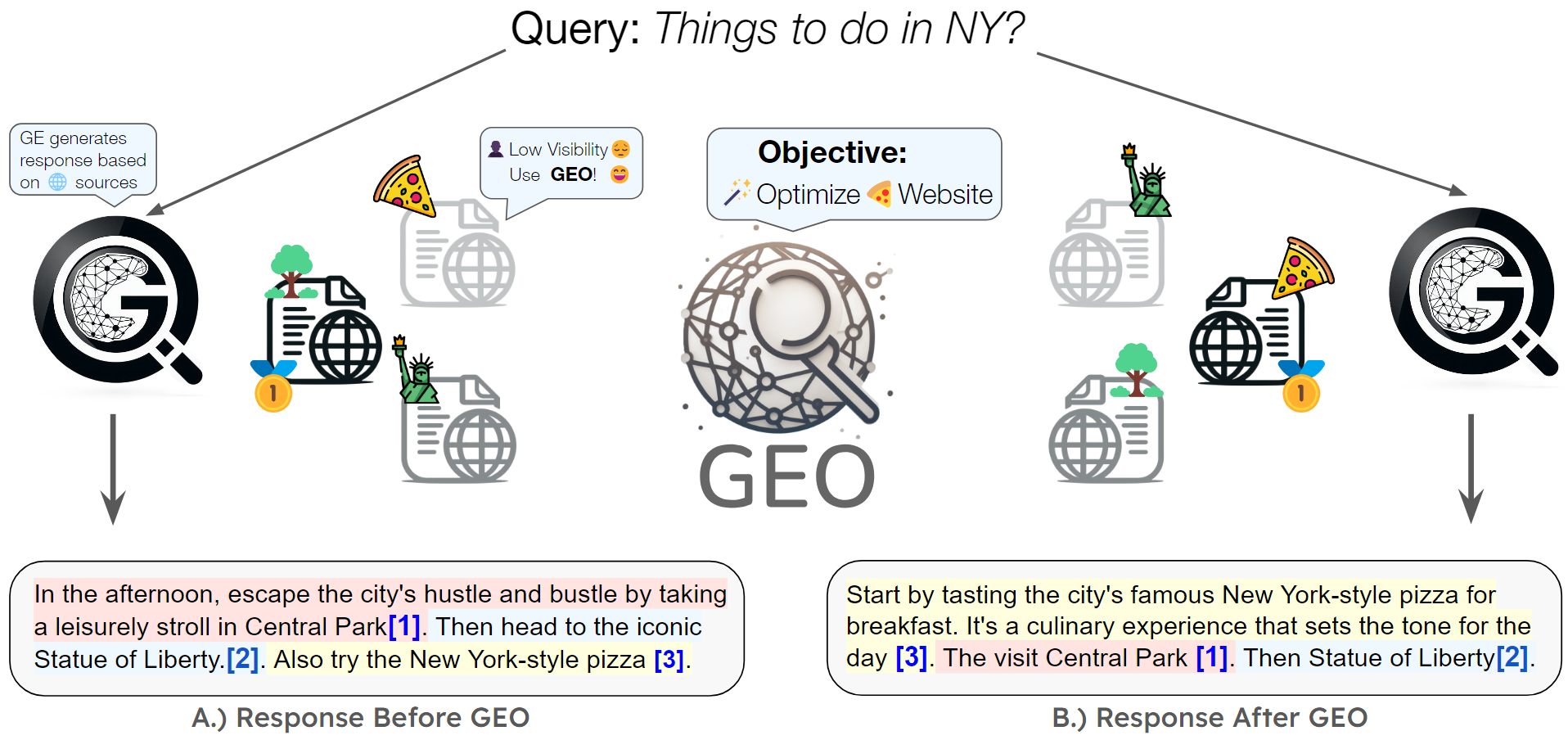

The advent of large language models (LLMs) has ushered in a new paradigm of search engines that use generative models to gather and summarize information to answer user queries. This emerging technology, which we formalize under the unified framework of generative engines (GEs), can generate accurate and personalized responses, rapidly replacing traditional search engines like Google and Bing. Generative engines typically satisfy queries by synthesizing information from multiple sources and summarizing them using LLMs. While this shift significantly improves user utility and generative search engine traffic, it poses a huge challenge for the third stakeholder: website and content creators. Given the black-box and fast-moving nature of generative engines, content creators have little to no control over when and how their content is displayed. With generative engines here to stay, we must ensure the creator economy is not disadvantaged. To address this, we introduce Generative Engine Optimization (GEO), the first novel paradigm to aid content creators in improving their content visibility in generative engine responses through a flexible black-box optimization framework for optimizing and defining visibility metrics. We facilitate systematic evaluation by introducing GEO-bench, a large-scale benchmark of diverse user queries across multiple domains, along with relevant web sources to answer these queries. Through rigorous evaluation, we demonstrate that GEO can boost visibility by up to 40% in generative engine responses. Moreover, we show the efficacy of these strategies varies across domains, underscoring the need for domain-specific optimization methods. Our work opens a new frontier in information discovery systems, with profound implications for both developers of generative engines and content creators.

@inproceedings{Aggarwal2023geo,
  author = {Aggarwal, Pranjal and Murahari, Vishvak and Rajpurohit, Tanmay and Kalyan, Ashwin and Narasimhan, Karthik and Deshpande, Ameet},
  title = {GEO: Generative Engine Optimization},
  year = {2024},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3637528.3671900},
  booktitle = {Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining},
  pages = {5--16},
  numpages = {12},
  keywords = {datasets and benchmarks, generative models, search engines},
  location = {Barcelona, Spain},
  series = {KDD '24},
}

- Demystifying Reinforcement Learning with Human Feedback
  Pranjal* Aggarwal, Shreyas* Chaudhari, Khanh Nguyen, and 4 more authors
  Under review, 2024

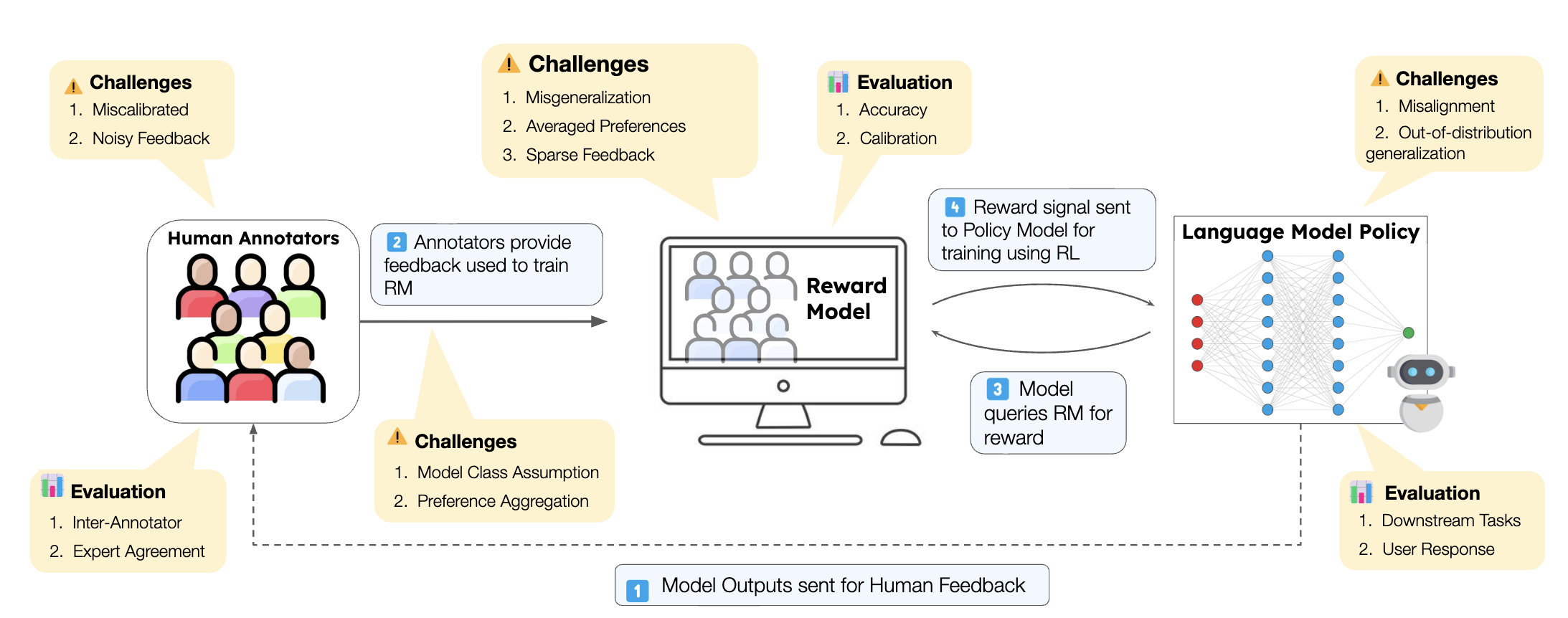

State-of-the-art large language models (LLMs) have become indispensable tools for various tasks. However, training LLMs to serve as effective assistants for humans requires careful consideration. A promising approach is reinforcement learning from human feedback (RLHF), which leverages human feedback to update the model in accordance with human preferences and mitigate issues like toxicity and hallucinations. Yet, an understanding of RLHF for LLMs is largely entangled with initial design choices that popularized the method, and current research focuses on augmenting those choices rather than fundamentally improving the framework. In this paper, we analyze RLHF through the lens of reinforcement learning principles to develop an understanding of its fundamentals, dedicating substantial focus to the core component of RLHF: the reward model. Our study investigates modeling choices, caveats of function approximation, and their implications on RLHF training algorithms, highlighting the underlying assumptions made about the expressivity of reward. Our analysis improves the understanding of the role of reward models and methods for their training, concurrently revealing limitations of the current methodology. We characterize these limitations, including incorrect generalization, model misspecification, and the sparsity of feedback, along with their impact on the performance of a language model. The discussion and analysis are substantiated by a categorical review of current literature, serving as a reference for researchers and practitioners to understand the challenges of RLHF and build upon existing efforts.

@inproceedings{Chaudhari2023demyst,
  title = {Demystifying Reinforcement Learning with Human Feedback},
  author = {Aggarwal, Pranjal* and Chaudhari, Shreyas* and Nguyen, Khanh and Murahari, Vishvak and Kalyan, Ashwin and Narasimhan, Karthik and Deshpande, Ameet},
  booktitle = {Under Review},
  year = {2024},
}

2023

- Let’s Sample Step by Step: Adaptive-Consistency for Efficient Reasoning and Coding with LLMs
  Pranjal Aggarwal, Aman Madaan, Yiming Yang, and 1 more author
  In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (Oral), Dec 2023

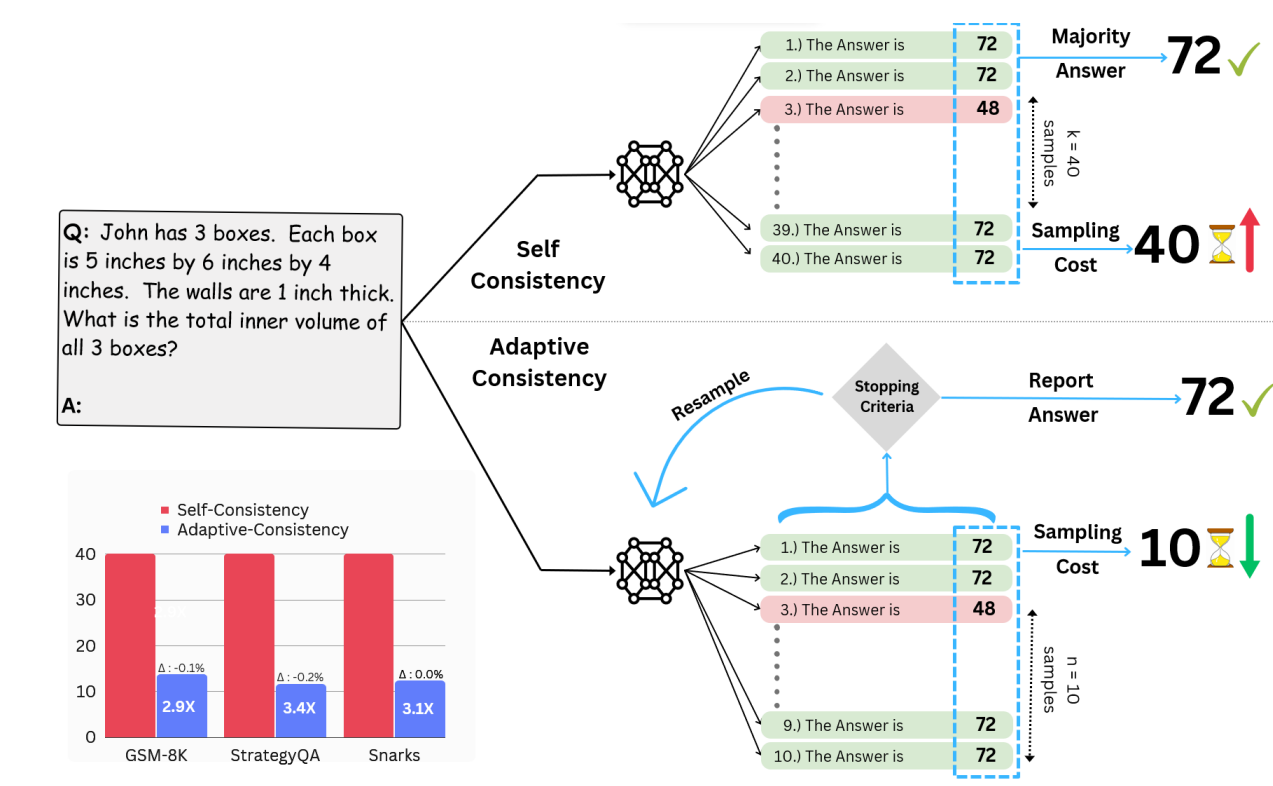

A popular approach for improving the correctness of output from large language models (LLMs) is Self-Consistency: poll the LLM multiple times and output the most frequent solution. Existing Self-Consistency techniques always generate a constant number of samples per question, whereas a better approach would be to non-uniformly distribute the available budget based on the amount of agreement in the samples generated so far. In response, we introduce Adaptive-Consistency, a cost-efficient, model-agnostic technique that dynamically adjusts the number of samples per question using a lightweight stopping criterion. Our experiments over 17 reasoning and code generation datasets and three LLMs demonstrate that Adaptive-Consistency reduces sample budget by up to 7.9 times with an average accuracy drop of less than 0.1%.
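The contrast between a fixed sample count and an agreement-based budget can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not specify the stopping criterion, so the sketch uses a simple majority-share rule in its place. The names `adaptive_sample`, `sample_fn`, `max_samples`, `min_samples`, and `agreement` are hypothetical.

```python
from collections import Counter
from typing import Callable, Tuple


def adaptive_sample(
    sample_fn: Callable[[], str],
    max_samples: int = 40,
    min_samples: int = 3,
    agreement: float = 0.95,
) -> Tuple[str, int]:
    """Draw answers one at a time and stop early once they agree.

    Assumption: the stopping rule here (leading answer's share of all
    samples reaches `agreement`) is a stand-in, not the paper's criterion.
    Returns the majority answer and the number of samples actually used.
    """
    if max_samples < 1:
        raise ValueError("max_samples must be at least 1")

    counts: Counter = Counter()
    for n in range(1, max_samples + 1):
        counts[sample_fn()] += 1
        answer, top = counts.most_common(1)[0]
        # Stop as soon as enough samples exist and the leader dominates.
        if n >= min_samples and top / n >= agreement:
            return answer, n

    # Budget exhausted: fall back to ordinary majority voting.
    return counts.most_common(1)[0][0], max_samples
```

Under this rule, questions on which the model answers consistently stop after `min_samples` draws, while contested questions consume up to the full `max_samples` budget, which then behaves like standard Self-Consistency.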

@inproceedings{Aggarwal2023LetsSS, title = {Let's Sample Step by Step: Adaptive-Consistency for Efficient Reasoning and Coding with LLMs}, author = {Aggarwal, Pranjal and Madaan, Aman and Yang, Yiming and {Mausam}}, booktitle = {Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (Oral)}, month = dec, year = {2023}, address = {Singapore}, publisher = {Association for Computational Linguistics}, pages = {12375--12396}, url = {https://sample-step-by-step.info/}, } -

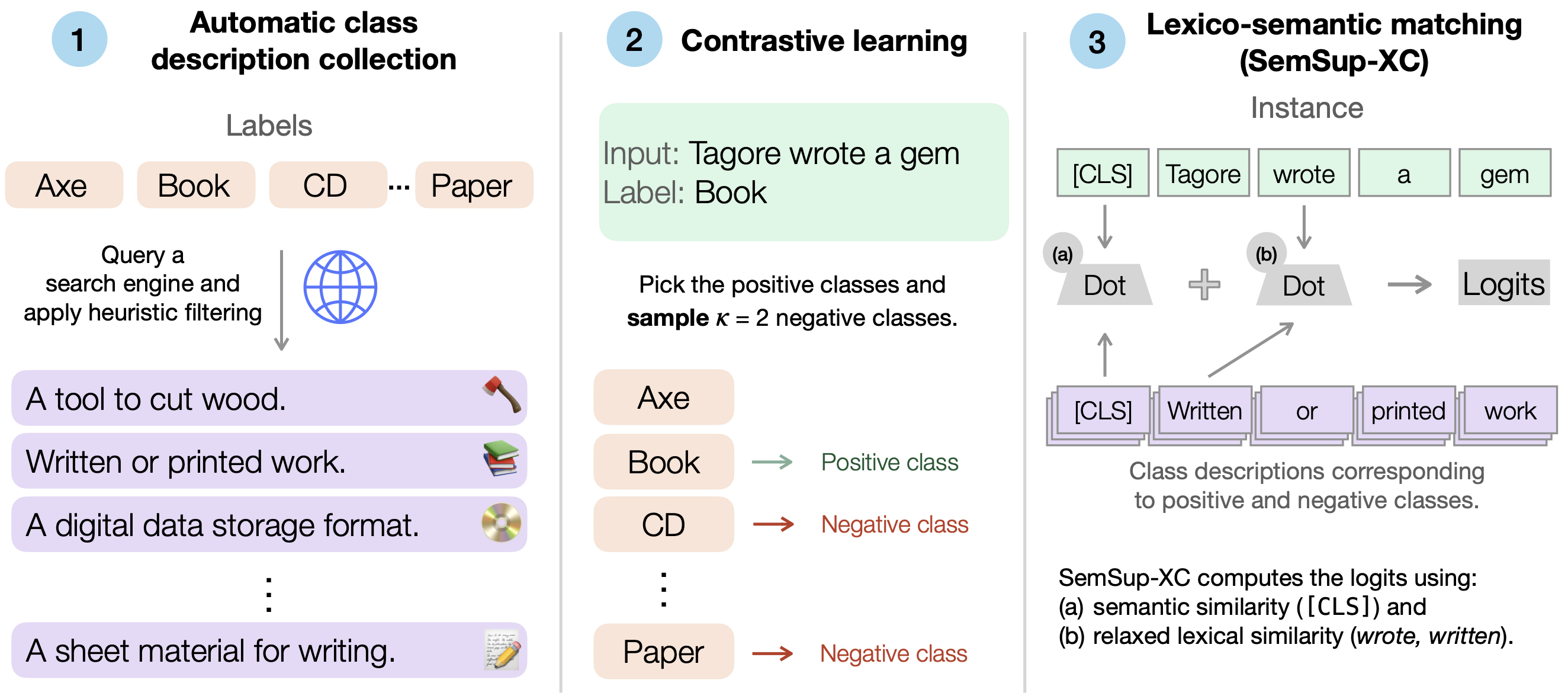

SemSup-XC: semantic supervision for zero and few-shot extreme classificationPranjal Aggarwal, Ameet Deshpande, and Karthik NarasimhanIn Proceedings of the 40th International Conference on Machine Learning, Dec 2023

Extreme classification (XC) involves predicting over large numbers of classes (thousands to millions), with real-world applications like news article classification and e-commerce product tagging. The zero-shot version of this task requires generalization to novel classes without additional supervision. In this paper, we develop SemSup-XC, a model that achieves state-of-the-art zero-shot and few-shot performance on three XC datasets derived from legal, e-commerce, and Wikipedia data. To develop SemSup-XC, we use automatically collected semantic class descriptions to represent classes and facilitate generalization through a novel hybrid matching module that matches input instances to class descriptions using a combination of semantic and lexical similarity. Trained with contrastive learning, SemSup-XC significantly outperforms baselines and establishes state-of-the-art performance on all three datasets considered, gaining up to 12 precision points on zero-shot and more than 10 precision points on one-shot tests, with similar gains for recall@10. Our ablation studies highlight the relative importance of our hybrid matching module and automatically collected class descriptions.

@inproceedings{Aggarwal2023SemSupXCSS,
  author = {Aggarwal, Pranjal and Deshpande, Ameet and Narasimhan, Karthik},
  title = {SemSup-XC: semantic supervision for zero and few-shot extreme classification},
  year = {2023},
  booktitle = {Proceedings of the 40th International Conference on Machine Learning},
  articleno = {11},
  numpages = {20},
  location = {Honolulu, Hawaii, USA},
  series = {ICML'23},
  url = {https://dl.acm.org/doi/10.5555/3618408.3618419},
}

- Deep learning for detection of iso-dense, obscure masses in mammographically dense breasts
  Pranjal* Aggarwal, Krithika* Rangarajan, Dhruv Kumar Gupta, and 7 more authors
  European Radiology, 2023
  Category: Computer Vision

@article{Rangarajan2023DeepLF,
  title = {Deep learning for detection of iso-dense, obscure masses in mammographically dense breasts.},
  author = {Aggarwal, Pranjal* and Rangarajan, Krithika* and Gupta, Dhruv Kumar and Dhanakshirur, Rohan Raju and Baby, Akhil and Pal, Chandan and Gupta, Arunkumar and Hari, Smriti and Banerjee, Subhashis and Arora, Chetan},
  journal = {European radiology},
  url = {https://pranjal2041.github.io/DenseMammogram/},
  year = {2023},
  paper = {https://link.springer.com/article/10.1007/s00330-023-09717-7},
  doi = {10.1007/s00330-023-09717-7},
}